
References:

- https://pytorch.org/docs/stable/generated/torch.nn.Conv1d.html#torch.nn.Conv1d

- https://github.com/pytorch/tutorials/blob/082c8b1bddb48b75f59860db3679d8c439238f10/advanced_source/static_quantization_tutorial.rst#L373

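- In short:

- Each conv layer (kernel_size=1, no activation in between) computes w1x+b1; two stacked layers give w2(w1x+b1)+b2, which merges into a single layer w2w1x+w2b1+b2;

- The new weight is w2w1 and the new bias is w2*b1+b2.

- Pay close attention to the bias computation!

- The earlier layer's bias is matrix-multiplied by the following layer's weight (w2*b1), so mind the order of the products when writing the code;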
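The two-layer formulas can be checked numerically. The sketch below is illustrative only: the channel count `C`, the seed and the helper `merged_output` are assumptions, not from the original. Both layers use the same in/out width so that the two typical mistakes (multiplying the weights as `w1 @ w2`, or adding `b1` without first multiplying it by `w2`) still produce valid shapes, just wrong values.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F

torch.manual_seed(0)

C = 8  # hypothetical width; equal in/out sizes keep the wrong variants shape-valid
conv1 = nn.Conv1d(C, C, kernel_size=1)
conv2 = nn.Conv1d(C, C, kernel_size=1)


def merged_output(weight, bias, x):
    # Hypothetical helper: a kernel_size=1 conv computes W @ x[:, :, t] + b at every position t
    return F.conv1d(x, weight.unsqueeze(-1), bias)


with torch.no_grad():
    # (out, in, 1) -> (out, in) matrices
    w1, w2 = conv1.weight.squeeze(-1), conv2.weight.squeeze(-1)
    b1, b2 = conv1.bias, conv2.bias

    x = torch.randn(2, C, 10)
    reference = conv2(conv1(x))

    # Correct merge: W = w2 w1, b = w2 b1 + b2
    good = merged_output(w2 @ w1, w2 @ b1 + b2, x)
    # Mistake 1: weight product in the wrong order
    bad_order = merged_output(w1 @ w2, w2 @ b1 + b2, x)
    # Mistake 2: b1 is not multiplied by w2
    bad_bias = merged_output(w2 @ w1, b1 + b2, x)

    err_good = (good - reference).abs().max().item()
    err_order = (bad_order - reference).abs().max().item()
    err_bias = (bad_bias - reference).abs().max().item()

print(f"correct merge:      {err_good:.2e}")
print(f"wrong weight order: {err_order:.2e}")
print(f"wrong bias:         {err_bias:.2e}")
```

Only the first error should stay at float32 rounding level; the other two are clearly nonzero.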


66import torch

import torch.nn as nn

import time

class MyModel(nn.Module):

def __init__(self, feature_size=512, hidden_dim=512):

super(MyModel, self).__init__()

self.hidden_dim = hidden_dim

# 定义多个 nn.Conv1d 层

self.fc_0 = nn.Conv1d(feature_size, hidden_dim * 4, 1)

self.fc_1 = nn.Conv1d(hidden_dim * 4, hidden_dim, 1)

self.fc_2 = nn.Conv1d(hidden_dim, hidden_dim // 2, 1)

w0, w1, w2 = self.fc_0.weight.reshape(hidden_dim * 4, -1), self.fc_1.weight.reshape(hidden_dim , -1), self.fc_2.weight.reshape(hidden_dim // 2, -1)

b0, b1, b2 = self.fc_0.bias.reshape(hidden_dim * 4, -1), self.fc_1.bias.reshape(hidden_dim , -1), self.fc_2.bias.reshape(hidden_dim // 2, -1)

# 将权重和偏置参数展开并合并

combined_weight = torch.mm(w2, torch.mm(w1, w0))

combined_bias = b2 + torch.mm(w2, b1) + torch.mm(torch.mm(w2, w1),b0)

# 创建新的 nn.Conv1d 层

self.fc_combined = nn.Conv1d(feature_size, hidden_dim // 2, 1)

self.fc_combined.weight = nn.Parameter(combined_weight.unsqueeze(-1))

self.fc_combined.bias = nn.Parameter(combined_bias.squeeze(-1))

def forward(self, features):

net = self.fc_combined(features)

return net

def forward2(self, features):

net2 = self.fc_2(self.fc_1(self.fc_0(features)))

return net2

def check(self, features):

net = self.fc_combined(features)

net2 = self.fc_2(self.fc_1(self.fc_0(features)))

print((net - net2).sum())

def t(fun):

def inner():

s= time.time()

fun()

print("time cost: ", time.time() - s)

return inner

# 创建模型

model = MyModel()

def f():

model.check(torch.randn(1, 512, 1024))

def f2():

model(torch.randn(1, 512, 1024))

def f3():

model.forward2(torch.randn(1, 512, 1024))

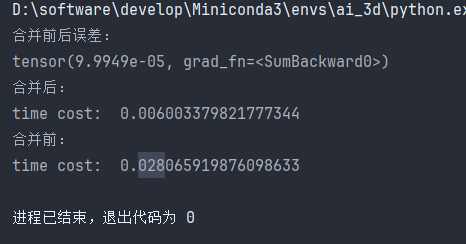

print("合并前后误差:")

f()

print("合并后:")

f2()

print("合并前:")

f3()
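The three-layer bias above (`b2 + w2 b1 + w2 w1 b0`) follows a general pattern: every earlier bias gets multiplied by the weights of all layers after it. The sketch below folds an arbitrary list of kernel_size=1 `nn.Conv1d` layers into one by starting from the identity map and applying y = w(Wx + b) + b_layer one layer at a time. The function name `fuse_pointwise_conv1d` and its restrictions (stride 1, no padding, groups 1, no activation between layers) are assumptions of this sketch, not part of the original code or a PyTorch API.

```python
import torch
import torch.nn as nn


def fuse_pointwise_conv1d(layers):
    """Hypothetical helper: fuse a chain of kernel_size=1 nn.Conv1d layers
    (no activations in between) into one equivalent nn.Conv1d.

    Assumes stride=1, padding=0 and groups=1 for every layer.
    """
    first = layers[0]
    p0 = first.weight
    with torch.no_grad():
        # Start from the identity map y = I x + 0, then fold each layer in
        weight = torch.eye(first.in_channels, device=p0.device, dtype=p0.dtype)
        bias = torch.zeros(first.in_channels, device=p0.device, dtype=p0.dtype)
        for layer in layers:
            assert layer.kernel_size == (1,) and layer.stride == (1,)
            assert layer.padding == (0,) and layer.groups == 1
            w = layer.weight.squeeze(-1)  # (out, in)
            # layer(W x + b) = (w W) x + (w b + b_layer)
            weight = w @ weight
            bias = w @ bias
            if layer.bias is not None:
                bias = bias + layer.bias
        fused = nn.Conv1d(first.in_channels, weight.shape[0], kernel_size=1,
                          device=p0.device, dtype=p0.dtype)
        fused.weight.copy_(weight.unsqueeze(-1))
        fused.bias.copy_(bias)
    return fused


# Usage with the same shapes as MyModel above
torch.manual_seed(0)
chain = [nn.Conv1d(512, 2048, 1), nn.Conv1d(2048, 512, 1), nn.Conv1d(512, 256, 1)]
fused = fuse_pointwise_conv1d(chain)

x = torch.randn(1, 512, 1024)
with torch.no_grad():
    ref_out = x
    for layer in chain:
        ref_out = layer(ref_out)
    max_err = (fused(x) - ref_out).abs().max().item()
print(f"fused weight shape: {tuple(fused.weight.shape)}, max abs error: {max_err:.2e}")
```

Because the fusion runs under `torch.no_grad()` and copies values, the fused layer is a snapshot just like `fc_combined` in `MyModel`: re-run the function whenever the original layers change.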
